Five UX Guidelines to Embed AI in Your Enterprise Applications

Artificial intelligence adoption has exploded in recent years. According to IBM, 77% of businesses have already adopted AI or have an adoption plan. The range of tasks AI can automate is also expanding rapidly as user-friendly tools such as ChatGPT broaden access. Computer vision AI can now reliably inspect products on an assembly line. Conversational AI chatbots engage with customers through natural language. And reinforcement learning algorithms have even beaten human champions at complex games like Go.

In my post about how to integrate AI APIs in your enterprise applications, I explained how easy it can be to begin integrating AI functionality into your applications. It is also important to address how implementing AI affects your application’s user experience, particularly with regard to trust and control between your users and AI-powered applications. We are, after all, asking a machine to make decisions or complete tasks that a human would typically handle. Without insight into why or how a machine makes a decision on our behalf, we must carefully weigh the consequences of delegating that decision. AI governance, which concerns itself with the how and why of AI decision-making, is still in its infancy.

As Andrew Moore explains, “at its core, AI is about automating judgments that have previously been the exclusive domains of humans. This is a significant challenge unto itself, of course, but it brings with it significant risk as well. Increasing effort, for instance, is required to make the decisions of AI systems more transparent and understandable in human terms.”

With AI permeating the enterprise, applications must evolve to integrate human workers and AI capabilities seamlessly. User experience is key to mass adoption. Applications should enable humans and AI algorithms to complement each other, with each focusing on its respective strengths. This article outlines UX guidelines to consider when embedding AI in enterprise applications. These guidelines help human workers develop intuitive trust in AI systems by providing transparency, oversight, and understanding.

1) Notify users about which tasks involve AI automation

Today, AI automates a wide range of work, from visual inspection in manufacturing to analyzing legal contracts, and it even assists with drafting and negotiating complex agreements. With advances in computer vision and natural language processing, AI can complete increasingly complex tasks.

Enterprise applications should clearly indicate where AI is automating a task or process. For example, an inventory management system may use computer vision AI to detect missing or defective items. The system should note which processes are handled by the AI vision algorithms versus human workers.

Transparency into AI automation enables users to calibrate their expectations properly. Users need to understand whether variation in output could be caused by an evolving machine learning model or by a human coworker’s work. Clear notifications set appropriate context.
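As a minimal sketch of how an application might track and disclose this, the Python below tags each workflow step with who performs it and derives the label a UI could show. The `Actor`, `WorkflowStep`, and `step_label` names and the `vision-model-v3` model name are hypothetical, chosen for illustration; a real interface would more likely render a badge or icon than plain text.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Actor(Enum):
    """Who performs a workflow step (hypothetical model for illustration)."""
    AI = "ai"
    HUMAN = "human"


@dataclass(frozen=True)
class WorkflowStep:
    name: str
    performed_by: Actor
    model_name: Optional[str] = None  # which AI model, when performed_by is Actor.AI


def step_label(step: WorkflowStep) -> str:
    """Return text a UI could show next to a step to disclose AI automation."""
    if step.performed_by is Actor.AI:
        model = f" ({step.model_name})" if step.model_name else ""
        return f"{step.name}: automated by AI{model}"
    return f"{step.name}: performed by a team member"


steps = [
    WorkflowStep("Detect missing or defective items", Actor.AI, "vision-model-v3"),
    WorkflowStep("Approve restock order", Actor.HUMAN),
]
for step in steps:
    print(step_label(step))
```

Keeping the actor on the step itself, rather than inferring it in the UI, means every screen that lists the step can disclose AI involvement consistently.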

2) Indicate which data is derived from AI

Machine learning algorithms can detect patterns and insights in data that humans may miss. However, users are often skeptical of insights from a “black box” system.

Techniques from explainable AI can provide transparency into AI-generated data. For example, attribution methods can show which parts of the input text most influenced a language model’s output, and saliency maps can highlight the image regions that drove an image classifier’s prediction.

Enterprise applications should visually differentiate any data or predictions generated by AI versus traditional methods. Users can then inspect the AI-generated data and determine whether to trust and act upon it.
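One way to support this, sketched below under the assumption that each field value carries its own provenance, is to store an `ai_generated` flag and an optional explanation next to the value and let the rendering layer mark AI output differently. The `FieldValue` type and `render` function are hypothetical; in a real interface the marker would more likely be an icon, color, or tooltip than bracketed text.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class FieldValue:
    value: str
    ai_generated: bool = False
    explanation: Optional[str] = None  # why the model produced this value, if known


def render(field: FieldValue) -> str:
    """Return display text that visibly distinguishes AI-generated values."""
    if not field.ai_generated:
        return field.value
    why = f" (why: {field.explanation})" if field.explanation else ""
    return f"{field.value} [AI-generated]{why}"


print(render(FieldValue("Acme Corp")))
print(render(FieldValue("High risk", ai_generated=True,
                        explanation="3 late payments in the last 6 months")))
```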

Providing AI explainability builds user confidence. Over time, users will better understand the capabilities and limitations of the machine learning models powering the AI.

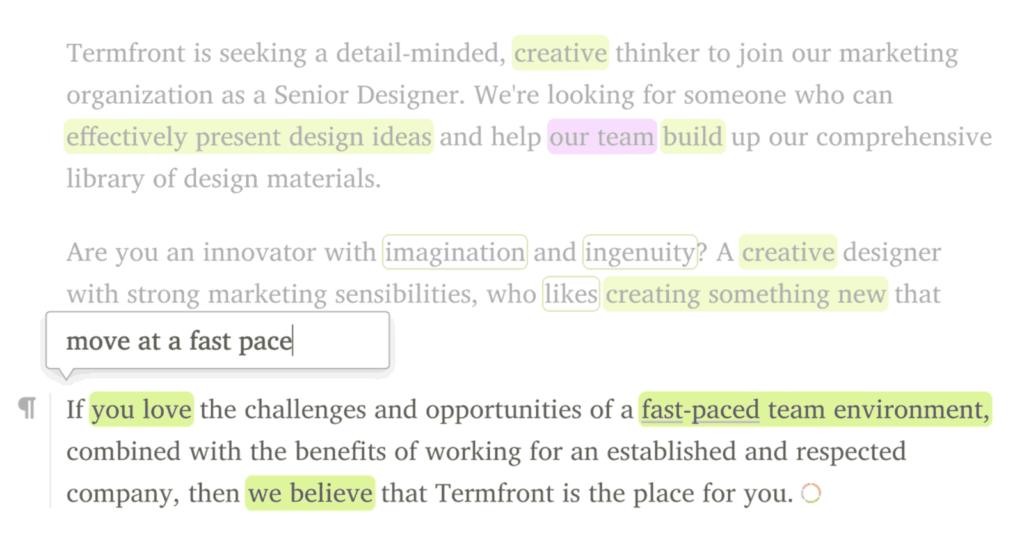

For example, Textio provides real-time suggestions on job descriptions as they are being written (along with other tools, such as a plagiarism checker). Any changes it proposes must be presented clearly but unobtrusively: the application can finish sentences and complete entire paragraphs from only a few words, which can be jarring to the user. In the example above, Textio moves the user’s input into a text bubble while placing its suggested text inline in the content, and marks the suggested text with an icon at the end.

3) Let robots be robots; let humans be humans

Early AI automation often aimed to replace human tasks entirely. The ideal today is for AI and humans to each focus on their strengths.

For example, an AI agent may handle initial customer service triage, while human agents provide empathy and resolve complex problems.

Applications should reorient user flows around human abilities such as emotional intelligence, creativity, and judgment, while AI handles repetitive and data-heavy tasks.

For example, instead of having salespeople enter data manually, have AI fill in templates that humans then review and customize. Or have AI flag initial product defects while engineers determine root causes.

The best human-AI collaboration minimizes the manual effort humans spend on automated tasks, freeing people to focus on high-value work that only humans can provide.

4) Account for inconsistency in results

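Unlike traditional software, AI systems can produce different outputs for the same input. Machine learning models learn patterns from large volumes of training data, and as a model is retrained on new data, its predictions change. Generative models such as large language models also typically sample their outputs, so even an unchanged model can answer the same prompt differently.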

For example, researchers at Mount Sinai’s Icahn School of Medicine found that the same machine learning algorithms that diagnosed pneumonia on chest X-rays from their own hospital did not perform as well on images from other hospitals. Seemingly small differences in how the X-rays were taken and processed produced markedly different results once the images were fed through the AI model.

Another example most consumers have experienced is asking a voice assistant or ChatGPT the same thing twice and receiving two very different responses. The machine learning models behind voice assistants and chatbots are continuously tweaked and improved, so the same input may produce a completely different output tomorrow than it does today.

Governance practices such as monitoring for data drift can detect when inputs change enough to affect model accuracy. Periodic retraining keeps models current, and testing each new model version before release can catch performance regressions.
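The article does not prescribe a drift metric. As one illustration, the sketch below computes the Population Stability Index (PSI), a common way to compare the distribution of a numeric model input in production against a baseline sample. The equal-width binning over the baseline range, the `eps` floor that avoids taking the log of zero, and the 0.1/0.25 thresholds (a widely used rule of thumb, not a standard) are all assumptions you would tune for your own data.

```python
import math
from typing import List, Sequence


def psi(expected: Sequence[float], actual: Sequence[float],
        bins: int = 10, eps: float = 1e-6) -> float:
    """Population Stability Index of `actual` relative to a baseline `expected` sample."""
    if not expected or not actual:
        raise ValueError("both samples must be non-empty")
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # avoid zero width when the baseline is constant

    def proportions(values: Sequence[float]) -> List[float]:
        counts = [0] * bins
        for v in values:
            # Equal-width bins over the baseline range; outliers go to the edge bins.
            idx = min(max(int((v - lo) / width), 0), bins - 1)
            counts[idx] += 1
        # Floor empty bins at eps so the log below is always defined.
        return [max(c / len(values), eps) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ai, ei in zip(a, e))


def drift_status(score: float) -> str:
    # Rule-of-thumb thresholds (an assumption, not a standard); tune for your data.
    if score < 0.1:
        return "stable"
    if score < 0.25:
        return "moderate drift"
    return "significant drift"


baseline = [i / 100 for i in range(100)]       # inputs seen at training time
recent = [0.9 + i / 1000 for i in range(100)]  # recent inputs bunched at the high end
score = psi(baseline, recent)
print(f"PSI = {score:.2f}: {drift_status(score)}")
```

A check like this would typically run on a schedule per input feature, with a "significant drift" result prompting investigation or retraining rather than an automatic model swap.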

Applications should provide context around AI-powered features to set expectations about inconsistencies. For example, a churn prediction feature might display the model’s confidence alongside each prediction.
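A minimal sketch of such a message, assuming a hypothetical binary churn classifier that returns a churn probability between 0 and 1: the confidence shown is the probability of whichever outcome the model predicts, and a low-confidence note prompts the user to review. The `churn_message` function and the 0.7 review threshold are illustrative assumptions, and raw classifier probabilities should be calibrated before they are shown to users as confidence.

```python
def churn_message(customer: str, churn_probability: float,
                  review_below: float = 0.7) -> str:
    """Describe a churn prediction together with the model's confidence in it."""
    if not 0.0 <= churn_probability <= 1.0:
        raise ValueError("churn_probability must be between 0 and 1")
    likely_churn = churn_probability >= 0.5
    # Confidence = probability of the outcome the model actually predicts.
    confidence = churn_probability if likely_churn else 1.0 - churn_probability
    verdict = "likely to churn" if likely_churn else "likely to stay"
    message = f"{customer} is {verdict} (AI confidence: {confidence:.0%})"
    if confidence < review_below:
        message += ". Low confidence: please review before acting."
    return message


print(churn_message("Acme Corp", 0.92))
print(churn_message("Globex", 0.40))
```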

Educational tips can help users understand why AI results are not deterministic like traditional code. With transparency and understanding, users will better trust AI systems despite inconsistencies.

5) Provide an escape route for when AI fails

Despite advances, AI systems still make mistakes in complex real-world environments. Techniques such as uncertainty quantification can estimate when a model is likely to be wrong, but unknown unknowns remain.

Applications should ensure users are not trapped when the AI fails. For example, a conversational AI assistant should allow easy escalation to a human agent. Natural language overrides also help, such as letting users say “I want to speak to a person.”
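The escalation rule can be as simple as the hypothetical router below, which hands the conversation to a person when the user asks for one or when the bot’s own confidence in its reply falls below a threshold. The regular expression, the `bot_confidence` score (assumed to come from whatever intent classifier the assistant uses), and the 0.6 cutoff are illustrative assumptions; a production assistant would likely also detect escalation intent with its language model rather than rely on a fixed pattern.

```python
import re

# Hypothetical pattern for explicit requests to reach a person.
ESCALATION_PATTERN = re.compile(
    r"\b(speak|talk|chat)\s+(to|with)\s+(a\s+|an\s+)?(real\s+)?"
    r"(person|human|agent|representative)\b",
    re.IGNORECASE,
)


def route_message(text: str, bot_confidence: float, min_confidence: float = 0.6) -> str:
    """Return 'human' if the user asks for a person or the bot is unsure, else 'bot'."""
    if ESCALATION_PATTERN.search(text):
        return "human"  # explicit natural language override always wins
    if bot_confidence < min_confidence:
        return "human"  # the bot is not confident enough to answer on its own
    return "bot"


print(route_message("I want to speak to a person", bot_confidence=0.95))
print(route_message("Where is my order?", bot_confidence=0.90))
```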

Any action initiated or recommended by an AI system should have a confirmation step before it is finalized. For example, if an AI scheduling assistant books a meeting, the user should see a confirmation prompt to approve the invites before they are sent. Without a confirmation step, users have no escape hatch to correct or abort actions the AI takes autonomously.
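A minimal sketch of that confirmation step, assuming the AI proposes actions as objects that stay pending until the user approves or rejects them. The `ProposedAction` class and its `rationale` field (shown in the prompt so the user can see why the AI chose the action) are hypothetical, and the `execute` callback stands in for whatever real side effect, such as sending invites, the application performs.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Callable


class Status(Enum):
    PENDING = "pending"
    EXECUTED = "executed"
    REJECTED = "rejected"


@dataclass
class ProposedAction:
    """An AI-proposed action that does nothing until a user approves it."""
    description: str
    rationale: str  # why the AI proposed it, shown in the confirmation prompt
    execute: Callable[[], None]
    status: Status = Status.PENDING

    def _require_pending(self) -> None:
        if self.status is not Status.PENDING:
            raise RuntimeError(f"action already {self.status.value}")

    def approve(self) -> None:
        self._require_pending()
        self.execute()  # the side effect runs only after explicit approval
        self.status = Status.EXECUTED

    def reject(self) -> None:
        self._require_pending()
        self.status = Status.REJECTED


sent_invites = []
action = ProposedAction(
    description="Send invites for 'Q3 planning' on Tuesday at 10:00",
    rationale="All four attendees are free at that time",
    execute=lambda: sent_invites.append("Q3 planning"),
)
print(f"{action.description}?\nReason: {action.rationale}")
action.approve()  # in a UI, this would be wired to the confirmation button
```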

Providing an override option is important even if the AI is highly accurate most of the time. Users need reassurance that the system won’t go rogue and run amok with automation. The confirmation step also helps build understanding – the user gets a moment to see why the AI took a certain action and confirm it aligns with the user’s own thinking.

In addition to confirmations, users should be able to undo completed actions if the AI made an incorrect choice, for example by canceling sent calendar invites with one click. Frictionless reversal of AI-initiated actions gives users confidence that they remain firmly in charge of the human-AI collaboration.
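Undo can be supported by recording every AI-initiated action together with a way to reverse it, as in the hypothetical `ActionLog` below. The lambdas stand in for real calendar API calls; an actual cancellation would typically also notify attendees rather than just change local state.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class ReversibleAction:
    description: str
    do: Callable[[], None]
    undo: Callable[[], None]  # how to reverse the action if the AI got it wrong


class ActionLog:
    """Records AI-initiated actions so a user can reverse the most recent one."""

    def __init__(self) -> None:
        self._done: List[ReversibleAction] = []

    def run(self, action: ReversibleAction) -> None:
        action.do()
        self._done.append(action)

    def undo_last(self) -> str:
        if not self._done:
            raise IndexError("nothing to undo")
        action = self._done.pop()
        action.undo()
        return action.description


calendar = set()
log = ActionLog()
log.run(ReversibleAction(
    description="Invite alice@example.com to 'Q3 planning'",
    do=lambda: calendar.add("alice@example.com"),
    undo=lambda: calendar.discard("alice@example.com"),
))
print("Undid:", log.undo_last())  # one click in the UI maps to this call
```

Pairing each action with its inverse at the moment it runs keeps undo cheap, at the cost of requiring every AI-initiated operation to define how it is reversed.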

Summary

Integrating AI into enterprise applications presents challenges but also huge opportunities. Following these UX guidelines will pave the way for seamless human-AI collaboration.

Notify users of AI automation, provide transparency into AI logic, and design interfaces focused on human abilities. Account for inconsistencies, and give control back to users when AI falls short.

With great power comes great responsibility. Thoughtful AI implementation demands considering user trust and adoption every step of the way.

The rewards for getting it right are immense. AI-powered apps make human work smarter and better supported, freeing people to focus on creativity, strategy, and meaningful connections.

The time is now. Reimagine your enterprise apps with an AI-first mindset. Lead your users – and industry – into an intelligent future. Commit to ethics, insight and empowerment. Together, build technology that augments lives.

If you want to take advantage of AI in your digital products, but don’t know where to start, check out our top five ways to employ AI in your enterprise web and mobile app strategy.